Graviton4 and Trainium2, the New Chips Designed by AWS

The new generation of AWS processors significantly improves power efficiency and performance to support a wide range of workloads, including the most demanding ones.

During the AWS re:Invent conference being held this week in Las Vegas, the company announced its new generation of Graviton and Trainium chips, designed entirely in-house to handle the increasingly demanding workloads running in the cloud.

These chips are the ideal complement to traditional instances based on Intel, AMD and NVIDIA chips, which will continue to play a major role in the AWS compute offering. The new processors will give customers of the world's largest public cloud provider more choice.

In addition, AWS will reduce its reliance on third-party chipmakers for Amazon EC2 compute while significantly improving scalability and price/performance.

AWS Graviton4

The new generation of Graviton chips, based on the 64-bit Arm architecture, delivers up to 30% better performance than its predecessor, with 50% more cores and 75% more memory bandwidth.

To date, AWS has built more than 2 million Graviton processors, and more than 50,000 customers already run Graviton-based Amazon EC2 instances.

Graviton4 is designed for a broad range of workloads, including databases, application and web servers, analytics and microservices, all of which demand ever-higher performance along with lower power consumption.

Alongside this launch, AWS also announced Amazon EC2 R8g instances, powered by the new Graviton4 chips. They offer up to three times as many virtual CPUs and three times as much memory per instance as the current-generation R7g instances.

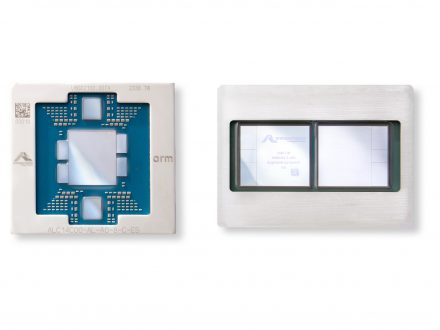

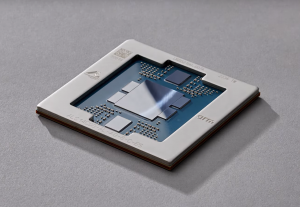

AWS Trainium2

As the name suggests, the new AWS Trainium2 chips are designed specifically for training machine learning and artificial intelligence models, including generative AI models.

AWS claims that Trainium2 trains models up to 4 times faster than the previous generation of Trainium chips. The real differentiator, however, is likely the ability to build Amazon EC2 clusters of up to 100,000 Trainium2 chips. These clusters can train large language models (LLMs) and foundation models (FMs) with billions of parameters, which are increasingly common in these high-performance domains, much faster and more efficiently.

"At this level of scale, it is possible to train a 300-billion-parameter LLM in weeks instead of months," according to AWS.

AWS and NVIDIA Expand Partnership

Continuing its focus on hardware for AI and machine learning workloads, AWS also announced an expanded strategic partnership with NVIDIA to deliver new infrastructure, software and services for these workloads.

AWS CEO Adam Selipsky invited NVIDIA founder and CEO Jensen Huang to the stage to provide more details about the expanded partnership between the two companies.

As part of this agreement, AWS will be the first cloud provider to offer its customers NVIDIA GH200 Grace Hopper Superchips with NVLink technology for interconnecting nodes in the cloud. These supercomputing instances are specialized for generative AI and are connected through Amazon's high-speed Elastic Fabric Adapter (EFA) network. Together, these features will let customers build clusters on a scale never before seen in the cloud.

The agreement also covers a collaboration between the two companies to offer NVIDIA DGX Cloud, NVIDIA's AI-training-as-a-service platform, on the AWS public cloud.

Finally, AWS will soon launch three new EC2 instances: P5e (with NVIDIA H200 Tensor Core GPUs), G6 (with NVIDIA L4 GPUs) and G6e (with NVIDIA L40S GPUs). They target a wide variety of workloads that are less demanding but still require substantial compute capability.