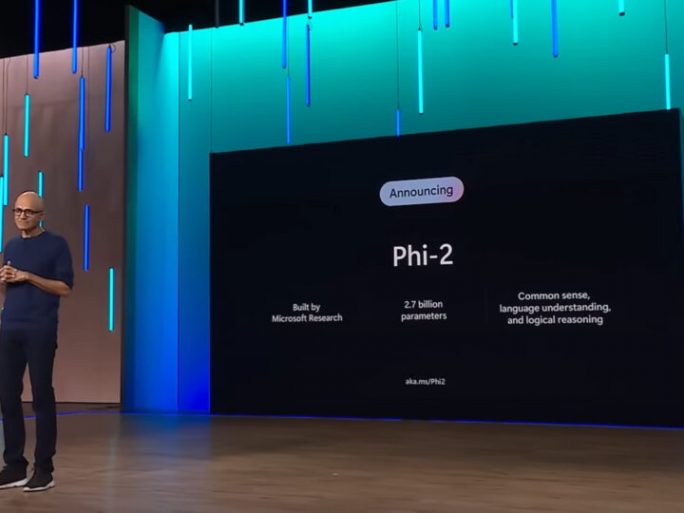

Microsoft Unveils Phi-2, its AI to Compete with Llama 2 and Gemini

The massive increase in the size of language models has unlocked emergent capabilities, redefining natural language processing.

In recent months, the Machine Learning Foundations team at Microsoft Research has released a series of small language models (SLMs) called “Phi”, which have achieved remarkable performance on a variety of benchmarks.

Following Phi-1, which achieved leading performance in Python coding, and Phi-1.5, which excelled in common-sense reasoning and language understanding, Microsoft has just introduced Phi-2, a language model with 2.7 billion parameters that demonstrates outstanding reasoning and language understanding.

Key innovations

The key question is whether the emergent capabilities seen in very large language models can be achieved at a smaller scale through strategic training choices, such as data selection.

Microsoft's Phi models set out to answer this question, achieving performance comparable to that of much larger models. Phi-2 relies on two main approaches to break the conventional scaling laws of language models:

- Training data quality: The quality of the training data plays a crucial role in model performance. Microsoft has focused on “textbook quality” data, using synthetic datasets created specifically to teach the model common-sense reasoning and general knowledge. In addition, the training corpus has been augmented with web data carefully selected for its educational value and content quality.

- Knowledge transfer at scale: Microsoft used innovative techniques to scale up from the previous Phi-1.5 model, with 1.3 billion parameters, embedding its knowledge into the 2.7-billion-parameter Phi-2. This knowledge transfer not only accelerates training convergence but also clearly improves Phi-2's benchmark scores.

Training details

Phi-2 is a Transformer-based model with a next-word prediction objective, trained on 1.4 trillion tokens from multiple passes over a mixture of synthetic and web datasets for NLP and coding. Training took 14 days on 96 A100 GPUs. Although Phi-2 has not been aligned through reinforcement learning from human feedback (RLHF) or instruction fine-tuned, it has been observed to behave better with respect to toxicity and bias than existing open-source models that did undergo alignment.
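As a rough illustration of the next-word prediction objective mentioned above, the sketch below computes the average cross-entropy loss over a toy sequence. The function name, the four-token vocabulary and the logits are all hypothetical; this is not Phi-2's training code, only a minimal example of the objective in plain Python.

```python
import math

def next_token_loss(logits, targets):
    """Average cross-entropy of the true next token under softmax(logits).

    logits:  one list of vocabulary scores per input position
    targets: the token ID that actually follows each position
    """
    total = 0.0
    for row, target in zip(logits, targets):
        # Numerically stable log-sum-exp over the vocabulary scores.
        peak = max(row)
        log_z = peak + math.log(sum(math.exp(x - peak) for x in row))
        # Negative log-probability assigned to the correct next token.
        total += log_z - row[target]
    return total / len(targets)

# Toy sequence of token IDs (hypothetical vocabulary of size 4).
tokens = [2, 0, 1, 3]
inputs, targets = tokens[:-1], tokens[1:]  # each position predicts the next token

# A model that knows nothing assigns equal scores to every token.
uniform_logits = [[0.0] * 4 for _ in inputs]
uniform_loss = next_token_loss(uniform_logits, targets)

# A model that strongly favours the correct next token gets a lower loss.
confident_logits = [[5.0 if i == t else 0.0 for i in range(4)] for t in targets]
confident_loss = next_token_loss(confident_logits, targets)

print(f"uniform: {uniform_loss:.3f}, confident: {confident_loss:.3f}")
```

Training lowers this loss across the whole corpus, so a model with uniform predictions (loss equal to the log of the vocabulary size) gradually moves towards the confident case.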

Phi-2 outperforms larger models, including Mistral and Llama-2, on several benchmarks. With only 2.7 billion parameters, it matches or outperforms models up to 25 times its size on complex tasks such as coding and mathematics. Phi-2 also compares favourably with the recently announced Google Gemini Nano 2, despite being smaller.
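To put the “25 times” figure in perspective, it is best read as a ratio of model sizes. The short sketch below computes that ratio for a few models. Only Phi-2's 2.7 billion parameters comes from this article; the other sizes are the nominal parameter counts implied by the public model names and are included here as assumptions.

```python
PHI2_PARAMS_B = 2.7  # billions of parameters, from the article

# Nominal sizes in billions, taken from the public model names
# (assumptions for illustration, not figures from this article).
OTHER_MODELS_B = {
    "Mistral-7B": 7.0,
    "Llama-2-7B": 7.0,
    "Llama-2-13B": 13.0,
    "Llama-2-70B": 70.0,
}

def size_ratio(other_b, base_b=PHI2_PARAMS_B):
    """How many times larger a model is than the base model."""
    return other_b / base_b

ratios = {name: round(size_ratio(size), 1) for name, size in OTHER_MODELS_B.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio}x the size of Phi-2")
```

Under these assumptions, only a model in the 70-billion-parameter class is roughly 25 times the size of Phi-2; the 7B and 13B models are between about 2.5 and 5 times larger.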