Significantly Reducing AI Energy Consumption

Researchers at the Technical University of Munich have developed a new training method for neural networks that is significantly more energy-efficient.

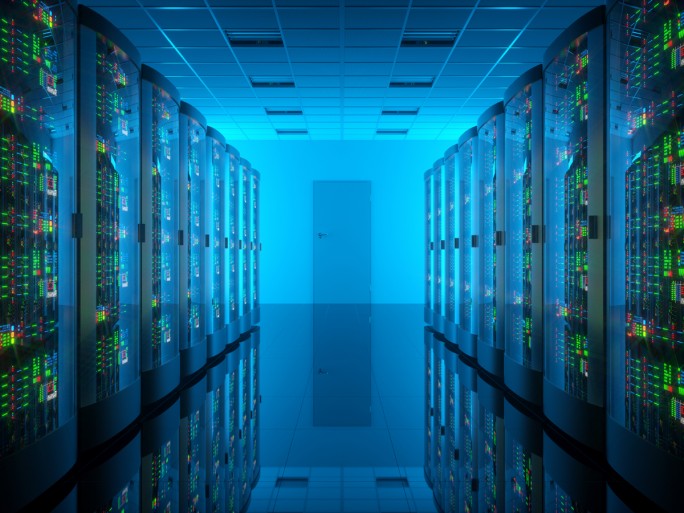

In 2020, data centers in Germany consumed approximately 16 billion kilowatt-hours of energy, about one percent of the country’s total electricity demand. Projections for 2025 estimate an increase to 22 billion kilowatt-hours. In the coming years, increasingly complex AI applications will further raise the demands on data centers, as training neural networks requires enormous computing resources. To counteract this trend, researchers have developed a training method that is one hundred times faster than existing training methods while delivering results of comparable accuracy. As a result, the energy required for training is significantly reduced.

Traditional AI Training Methods Require Many Iterations

Neural networks, which are used in AI for tasks such as image recognition and natural language processing, are inspired by the human brain. They consist of interconnected nodes, known as artificial neurons. These neurons receive input signals, which are then weighted and summed according to specific parameters. If a defined threshold is exceeded, the signal is passed on to the next nodes.
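The behavior of a single artificial neuron described above can be sketched in a few lines of Python. This is a minimal illustration only: the function name `neuron`, the bias term, and the hard threshold are assumptions made for the example, and real networks usually use smooth activation functions instead of a strict cutoff.

```python
import numpy as np

def neuron(inputs, weights, bias=0.0, threshold=0.0):
    """Weight and sum the inputs; pass the signal on only above the threshold."""
    signal = float(np.dot(weights, inputs) + bias)
    # Below the threshold the neuron stays silent (assumed behavior for this sketch).
    return signal if signal > threshold else 0.0

# Hypothetical example values, chosen only for illustration.
active = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.1)
silent = neuron(inputs=[1.0, 1.0], weights=[-1.0, -1.0])
```

Here `active` carries a small positive signal on to the next nodes, while `silent` is zero because its weighted sum is negative.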

Training these networks typically begins with randomly chosen parameter values, often following a normal distribution. These values are then gradually adjusted to improve the network’s predictions. Since this training method requires numerous iterations, it is extremely computationally intensive and consumes a large amount of energy.
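To see why the iterative approach is costly, consider a deliberately tiny sketch: a model with only two parameters, started from normally distributed random values and adjusted step by step with gradient descent. The function name `train_iteratively`, the learning rate, and the number of steps are assumptions for this example; real networks have millions of parameters and need far more iterations.

```python
import numpy as np

def train_iteratively(x, y, steps=500, lr=0.1, seed=0):
    """Fit y = w * x + b by repeatedly nudging w and b against the error."""
    rng = np.random.default_rng(seed)
    w, b = rng.normal(size=2)  # random start drawn from a normal distribution
    losses = []
    for _ in range(steps):
        err = (w * x + b) - y
        losses.append(float(np.mean(err ** 2)))
        # Gradient of the mean squared error with respect to w and b.
        w -= lr * 2.0 * np.mean(err * x)
        b -= lr * 2.0 * np.mean(err)
    return w, b, losses

# Hypothetical training data: points on the line y = 2x + 1.
x = np.linspace(-1.0, 1.0, 50)
y = 2.0 * x + 1.0
w, b, losses = train_iteratively(x, y)
```

Every one of the 500 steps touches the whole data set, and even this two-parameter model needs hundreds of passes before `w` and `b` settle near 2 and 1.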

Selecting Parameters Based on Probability Calculations

Felix Dietrich, Professor of Physics-Enhanced Machine Learning, and his team have now developed a new method. Instead of determining the parameters between nodes iteratively, their approach is based on probability calculations. The probabilistic method deliberately selects values at critical points in the training data, where values change most strongly and rapidly.
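The general idea can be illustrated with a rough sketch. It is not the team's implementation: the sampling rule (pairs of training points drawn with probability proportional to how steeply the target changes between them), the tanh activation, the scaling constants, and the names `sample_hidden_layer` and `fit` are all assumptions made for this example. What it does show is the absence of a training loop: hidden-layer parameters are drawn once, and only the output layer is fitted with a single linear least-squares solve.

```python
import numpy as np

def sample_hidden_layer(x, y, n_neurons, rng):
    """Draw hidden-layer weights and biases from pairs of training points."""
    n = len(x)
    i = rng.integers(0, n, size=10 * n_neurons)
    j = rng.integers(0, n, size=10 * n_neurons)
    keep = i != j                      # a pair needs two distinct points
    i, j = i[keep], j[keep]
    dx = x[j] - x[i]
    dist = np.linalg.norm(dx, axis=1)
    # Assumed rule: favor pairs where the target changes strongly over a short distance.
    steepness = np.abs(y[j] - y[i]) / dist
    chosen = rng.choice(len(i), size=n_neurons, p=steepness / steepness.sum())
    # Each neuron points from x_i to x_j and switches sign at their midpoint.
    w = 2.0 * dx[chosen] / dist[chosen, None] ** 2
    midpoint = 0.5 * (x[i[chosen]] + x[j[chosen]])
    b = -np.sum(w * midpoint, axis=1)
    return w, b

def fit(x, y, n_neurons=50, seed=0):
    """Sample the hidden layer, then solve for the output layer in one step."""
    rng = np.random.default_rng(seed)
    w, b = sample_hidden_layer(x, y, n_neurons, rng)

    def features(x_new):
        hidden = np.tanh(x_new @ w.T + b)
        return np.column_stack([hidden, np.ones(len(x_new))])

    coef, *_ = np.linalg.lstsq(features(x), y, rcond=None)
    return lambda x_new: features(x_new) @ coef

# Hypothetical data with one sharp transition around x = 0.
x = np.linspace(-1.0, 1.0, 200).reshape(-1, 1)
y = np.tanh(10.0 * x[:, 0])
model = fit(x, y)
mse = float(np.mean((model(x) - y) ** 2))
```

Because steep pairs are drawn more often, most neurons end up concentrated around the transition near zero, which is where the target function is hardest to represent.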

The current study aims to use this approach to learn energy-conserving dynamical systems from data. Such systems evolve over time according to specific rules and can be found in applications such as climate modeling and financial market analysis.
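As a simple illustration of what an energy-conserving dynamical system is, the sketch below simulates a frictionless oscillator whose total energy should stay constant over time. The system, the leapfrog integration scheme, and the names `hamiltonian` and `simulate` are assumptions chosen for the example; they are not taken from the study.

```python
import numpy as np

def hamiltonian(q, p):
    """Total energy of a unit-mass, unit-stiffness oscillator."""
    return 0.5 * p ** 2 + 0.5 * q ** 2

def simulate(q, p, dt=0.01, steps=1000):
    """Advance position q and momentum p with the leapfrog scheme."""
    energies = [hamiltonian(q, p)]
    for _ in range(steps):
        p -= 0.5 * dt * q   # half step for momentum: dp/dt = -q
        q += dt * p         # full step for position: dq/dt = p
        p -= 0.5 * dt * q   # second half step for momentum
        energies.append(hamiltonian(q, p))
    return q, p, np.array(energies)

q_end, p_end, energies = simulate(q=1.0, p=0.0)
drift = float(np.max(np.abs(energies - energies[0])))
```

The value `drift` measures how far the simulated energy strays from its starting value. A model that learns such a system from data should keep this quantity small as well.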

"Our method makes it possible to determine the required parameters with minimal computational effort. This allows neural networks to be trained significantly faster and, as a result, more energy-efficiently," explains Felix Dietrich. "Moreover, our research has shown that the new method achieves an accuracy comparable to that of iteratively trained networks."